Sample Solution for Assignment 2 (Total: 50 marks)

Due Friday, Feb. 24,

- (6 marks) Exercise 4.6

The heuristic h = h1 + h2 (adding misplaced tiles and

f(n) = g(n) + h(n)

≤ g(n) + h*(n) + c

≤ C* + c

≤ g(G2)

so G2 will never

be expanded before an optimal goal is expanded.

- (6 marks) Exercise 4.9

The misplaced-tiles heuristic is exact for the problem where a tile can move from square A to square B. As this is a relaxation of the condition that a tile can move from square A to square B if B is blank, Gaschnig’s heuristic cannot be less than the misplaced-tiles heuristic. As it is also admissible (being exact for a relaxation of the original problem), Gaschnig’s heuristic is therefore more accurate.

If we permute two

adjacent tiles in the goal state, we have a state where misplaced-tiles and

To compute Gaschnig’s heuristic, repeat the following until the goal

state is reached: let B be the current location of the blank; if B is occupied

by tile X (not the blank) in the goal state, move X to B; otherwise, move any

misplaced tile to B. Students could be asked to prove that this is the optimal

solution to the relaxed problem.

- (8 marks) Exercise 4.11

- (2 marks) Local beam search with k = 1 is hill-climbing search.

- (2 marks) Local beam search with k = ∞. The idea is that if every successor

is retained (because k is

unbounded), then the search resembles breadth-first search in that it

adds one complete layer of nodes before adding the next layer. Starting

from one state, the algorithm would be essentially identical to

breadth-first search except that each layer is generated all at once.

- (2 marks) Simulated annealing with T = 0 at all times: ignoring the fact that

the termination step would be triggered immediately, the search would be

identical to first-choice hill climb ing because every downward successor would

be rejected with probability 1.

- (2 marks) Genetic algorithm with population size N = 1: if the population size is 1, then the

two selected parents will be the same individual; crossover yields an

exact copy of the individual; then there is a small chance of mutation.

Thus, the algorithm executes a random walk in the space of individuals.

- (5 marks) Exercise 4.12

If we assume the comparison function is transitive, then

we can always sort the nodes using it, and choose the node that is at the top

of the sort. Efficient priority queue data structures rely only on comparison

operations, so we lose nothing in efficiency—except for the fact that the comparison operation on states may be much more

expensive than comparing two numbers, each of which can be computed just once.

A* relies on the division of the total cost

estimate f(n) into the

cost-so-far and the cost-to- go. If we have comparison operators for each of

these, then we can prefer to expand a node that is better than other nodes on

both comparisons. Unfortunately, there will usually be no such node. The

tradeoff between g(n) and h(n) cannot be realized without numerical values.

- (10 marks) Exercise 6.1

- (2 marks) 9!

- (2

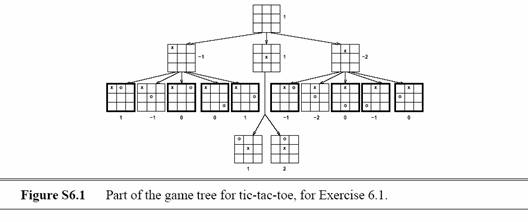

marks) Figure S6.1 shows the game tree, with the evaluation

function values below the terminal nodes and the backed-up values to the

right of the non

terminal nodes. The values imply that the best starting

move for X is to take the center. The terminal nodes with a bold outline

are the ones that do not need to be evaluated, assuming the optimal

ordering.

- (2

marks) See b.

- (2 marks)

- (2 marks)

- (5 marks) Exercise 6.2

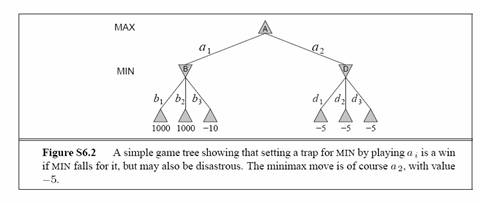

Consider a MIN node whose children are terminal nodes. If MIN plays suboptimally, then

the value of the node is greater than or equal to the value it would have if MIN played

optimally. Hence, the value of the MAX node that is the MIN

node’s parent can only be increased. This

argument can be extended by a simple induction all the way to the root. If

the suboptimal play by MIN is predictable,

then one can do better than a minimax strategy. For

example, if MIN always falls for a certain kind of trap and loses,

then setting the trap guarantees a win even if there is actually a devastating

response for MIN. This is shown in Figure S6.2.

- (10 marks) Write your own tic-tac-toe program with techniques discussed in class. Your program should be able to play n by n boards with m in a row. Do not submit code; rather, answer:

- (2 marks) Describe the algorithms and techniques you used in your program.

- (2 marks) Which techniques were most useful?

- (2 marks) For what values of n and m can you solve the entire game tree?

- (2 marks) How many nodes do these scenarios generate?

- (2 marks) Do optimal players tie in these scenarios or is one player guaranteed to win? If one player wins, which one?