This feed contains pages with tag "planet".

Context

- See hibernate-pocket

Testing continued

- following a suggestion of gordon1, unload the mediatek module first. The following seems to work, either from the console or under sway

echo devices > /sys/power/pm_test

echo reboot > /sys/power/disk

rmmod mt76x2u

echo disk > /sys/power/state

modprobe mt76x2u

- It even works via ssh (on wired ethernet) if you are a bit more patient for it to come back.

- replacing "reboot" with "shutdown" doesn't seem to affect test mode.

- replacing "devices" with "platform" (or "processors") leads to unhappiness.

- under sway, the screen goes blank, and it does not resume

- same on console

Configuration

script: https://docs.kernel.org/power/basic-pm-debugging.html

kernel is 6.15.4-1~exp1+reform20250628T170930Z

State of things

normal reboot works

Either from the console, or from sway, the initial test of reboot mode hibernate fails. In both cases it looks very similar to halting.

- the screen is dark (but not completely black)

- the keyboard is still illuminated

- the system-controller still seems to work, although I need to power off before I can power on again, and any "hibernation state" seems lost.

Running tests

- this is 1a from above

- freezer test passes

- devices test from console

- console comes back (including input)

- networking (both wired and wifi) seems wedged.

- console is full of messages from mt76x2u about vendor request 06 and 07 failing. This seems related to https://github.com/morrownr/7612u/issues/17

- at some point the console becomes non-responsive, except for the aforementioned messages from the wifi module.

- devices test under sway

- display comes back

- keyboard/mouse seem disconnected

- network down / disconnected?

My web pages are (still) in ikiwiki, but lately I have started authoring things like assignments and lectures in org-mode so that I can have some literate programming facilities. There is a built-in org-mode export, but it just exports source blocks as examples (i.e. unhighlighted verbatim). I added a custom exporter to mark up source blocks in a way ikiwiki can understand. Luckily this is not too hard the second time.

(with-eval-after-load "ox-md"

(org-export-define-derived-backend 'ik 'md

:translate-alist '((src-block . ik-src-block))

:menu-entry '(?m 1 ((?i "ikiwiki" ik-export-to-ikiwiki)))))

(defun ik-normalize-language (str)

(cond

((string-equal str "plait") "racket")

((string-equal str "smol") "racket")

(t str)))

(defun ik-src-block (src-block contents info)

"Transcode a SRC-BLOCK element from Org to ikiwiki.

CONTENTS is nil. INFO is a plist used as a communication

channel."

(let* ((body (org-element-property :value src-block))

(lang (ik-normalize-language (org-element-property :language src-block))))

(format "[[!format %s \"\"\"\n%s\"\"\"]]" lang body)))

(defun ik-export-to-ikiwiki

(&optional async subtreep visible-only body-only ext-plist)

"Export current buffer as an ikiwiki markdown file.

See org-md-export-to-markdown for full docs"

(require 'ox)

(interactive)

(let ((file (org-export-output-file-name ".mdwn" subtreep)))

(org-export-to-file 'ik file

async subtreep visible-only body-only ext-plist)))

See web-stacker for the background.

yantar92 on #org-mode pointed out that a derived backend would be

a cleaner solution. I had initially thought it was too complicated, but I have to agree the example in the org-mode documentation does

pretty much what I need.

This new approach has the big advantage that the generation of URLs happens at export time, so it's not possible for the displayed program code and the version encoded in the URL to get out of sync.

;; derived backend to customize src block handling

(defun my-beamer-src-block (src-block contents info)

"Transcode a SRC-BLOCK element from Org to beamer

CONTENTS is nil. INFO is a plist used as a communication

channel."

(let ((attr (org-export-read-attribute :attr_latex src-block :stacker)))

(concat

(when (or (not attr) (string= attr "both"))

(org-export-with-backend 'beamer src-block contents info))

(when attr

(let* ((body (org-element-property :value src-block))

(table '(? ?\n ?: ?/ ?? ?# ?[ ?] ?@ ?! ?$ ?& ??

?( ?) ?* ?+ ?, ?= ?%))

(slug (org-link-encode body table))

(simplified (replace-regexp-in-string "[%]20" "+" slug nil 'literal)))

(format "\n\\stackerlink{%s}" simplified))))))

(defun my-beamer-export-to-latex

(&optional async subtreep visible-only body-only ext-plist)

"Export current buffer as a (my)Beamer presentation (tex).

See org-beamer-export-to-latex for full docs"

(interactive)

(let ((file (org-export-output-file-name ".tex" subtreep)))

(org-export-to-file 'my-beamer file

async subtreep visible-only body-only ext-plist)))

(defun my-beamer-export-to-pdf

(&optional async subtreep visible-only body-only ext-plist)

"Export current buffer as a (my)Beamer presentation (PDF).

See org-beamer-export-to-pdf for full docs."

(interactive)

(let ((file (org-export-output-file-name ".tex" subtreep)))

(org-export-to-file 'my-beamer file

async subtreep visible-only body-only ext-plist

#'org-latex-compile)))

(with-eval-after-load "ox-beamer"

(org-export-define-derived-backend 'my-beamer 'beamer

:translate-alist '((src-block . my-beamer-src-block))

:menu-entry '(?l 1 ((?m "my beamer .tex" my-beamer-export-to-latex)

(?M "my beamer .pdf" my-beamer-export-to-pdf)))))

An example of using this in an org-document would be as below. The first source code block generates only a link in the output while the last adds a generated link to the normal highlighted source code.

* Stuff

** Frame

#+attr_latex: :stacker t

#+NAME: last

#+BEGIN_SRC stacker :eval no

(f)

#+END_SRC

#+name: smol-example

#+BEGIN_SRC stacker :noweb yes

(defvar x 1)

(deffun (f)

(let ([y 2])

(deffun (h)

(+ x y))

(h)))

<<last>>

#+END_SRC

** Another Frame

#+ATTR_LATEX: :stacker both

#+begin_src smol :noweb yes

<<smol-example>>

#+end_src

The Emacs part is superseded by a cleaner approach

In the upcoming term I want to use KC Lu's web based stacker tool.

The key point is that it takes (small) programs encoded as part of the url.

Yesterday I spent some time integrating it into my existing

org-beamer workflow.

In my init.el I have

(defun org-babel-execute:stacker (body params)

(let* ((table '(? ?\n ?: ?/ ?? ?# ?[ ?] ?@ ?! ?$ ?& ??

?( ?) ?* ?+ ?, ?= ?%))

(slug (org-link-encode body table))

(simplified (replace-regexp-in-string "[%]20" "+" slug nil 'literal)))

(format "\\stackerlink{%s}" simplified)))

This means that when I "execute" the block below with C-c C-c, it updates the link, which is then embedded in the slides.

#+begin_src stacker :results value latex :exports both

(deffun (f x)

(let ([y 2])

(+ x y)))

(f 7)

#+end_src

#+RESULTS:

#+begin_export latex

\stackerlink{%28deffun+%28f+x%29%0A++%28let+%28%5By+2%5D%29%0A++++%28%2B+x+y%29%29%29%0A%28f+7%29}

#+end_export
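The encoding step can be cross-checked outside Emacs. The following is a minimal Python sketch, not the code used for the slides. It assumes that `urllib.parse.quote_plus` (percent-encode everything except letters, digits and `_.-~`, with spaces written as `+`) agrees with the `org-link-encode` call plus the `%20` replacement for this input. The name `stacker_slug` is made up for illustration.

```python
from urllib.parse import quote_plus


def stacker_slug(program: str) -> str:
    """Percent-encode PROGRAM for use as the program= query parameter."""
    # safe="" is already the default; it is spelled out to show that no
    # punctuation is exempt from encoding.
    return quote_plus(program, safe="")


# The source block from the example above, with its two- and four-space indents.
program = "(deffun (f x)\n  (let ([y 2])\n    (+ x y)))\n(f 7)"
slug = stacker_slug(program)
print(slug)
```

For this program the printed slug should be the same string that appears inside `\stackerlink{...}` in the results block.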

The \stackerlink macro is probably fancier than needed. One could

just use \href from hyperref.sty, but I wanted to match the

appearance of other links in my documents (buttons in the margins).

This is based on a now lost answer from stackoverflow.com;

I think it wasn't this one, but you get the main idea: use \hyper@normalise.

\makeatletter

% define \stacker@base appropriately

\DeclareRobustCommand*{\stackerlink}{\hyper@normalise\stackerlink@}

\def\stackerlink@#1{%

\begin{tikzpicture}[overlay]%

\coordinate (here) at (0,0);%

\draw (current page.south west |- here)%

node[xshift=2ex,yshift=3.5ex,fill=magenta,inner sep=1pt]%

{\hyper@linkurl{\tiny\textcolor{white}{stacker}}{\stacker@base?program=#1}}; %

\end{tikzpicture}}

\makeatother

Problem description(s)

For some of its cheaper dedicated servers, OVH does not provide a KVM (in the virtual console sense) interface. Sometimes when a virtual console is provided, it requires a horrible java applet that won't run on modern systems without a lot of song and dance. Although OVH provides a few web based ways of installing,

- I prefer to use the debian installer image I'm used to and trust, and

- I needed some way to debug a broken install.

I have only tested this in the OVH rescue environment, but the general approach should work anywhere the rescue environment can install and run QEMU.

QEMU to the rescue

Initially I was horrified by the ovh forums post but eventually I realized it not only gave a way to install from a custom ISO, but provided a way to debug quite a few (but not all, as I discovered) boot problems by using the rescue env (which is an in-memory Debian Buster, with an updated kernel). The original solution uses VNC but that seemed superfluous to me, so I modified the procedure to use a "serial" console.

Preliminaries

- Set up a default ssh key in the OVH web console

- (re)boot into rescue mode

- ssh into root@yourhost (you might need to ignore changing host keys)

- cd /tmp

- You will need qemu (and may as well use kvm).

ovmf is needed for a UEFI bios.

apt install qemu-kvm ovmf

- Download the netinstaller iso

Download vmlinuz and initrd.gz that match your iso. In my case:

https://deb.debian.org/debian/dists/testing/main/installer-amd64/current/images/cdrom/

Doing the install

- Boot the installer in qemu. Here the system has two hard drives visible as /dev/sda and /dev/sdb.

qemu-system-x86_64 \

-enable-kvm \

-nographic \

-m 2048 \

-bios /usr/share/ovmf/OVMF.fd \

-drive index=0,media=disk,if=virtio,file=/dev/sda,format=raw \

-drive index=1,media=disk,if=virtio,file=/dev/sdb,format=raw \

-cdrom debian-bookworm-DI-alpha2-amd64-netinst.iso \

-kernel ./vmlinuz \

-initrd ./initrd.gz \

-append console=ttyS0,9600,n8

- Optionally follow Debian wiki to configure root on software raid.

- Make sure your disk(s) have an ESP partition.

- qemu and d-i are both using Ctrl-a as a prefix, so you need to C-a C-a 1 (e.g.) to switch terminals

- make sure you install ssh server, and a user account

Before leaving the rescue environment

- You may have forgotten something important, no problem you can boot the disks you just installed in qemu (I leave the apt here for convenient copy pasta in future rescue environments).

apt install qemu-kvm ovmf && \

qemu-system-x86_64 \

-enable-kvm \

-nographic \

-m 2048 \

-bios /usr/share/ovmf/OVMF.fd \

-drive index=0,media=disk,if=virtio,file=/dev/sda,format=raw \

-drive index=1,media=disk,if=virtio,file=/dev/sdb,format=raw \

-nic user,hostfwd=tcp:127.0.0.1:2222-:22 \

-boot c

One important gotcha is that the installer guesses interface names based on the "hardware" it sees during the install. I wanted the network to work both in QEMU and in bare hardware boot, so I added a couple of link files. If you copy this, you most likely need to double check the PCI paths. You can get this information, e.g. from udevadm, but note you want to query in rescue env, not in QEMU, for the second case.

/etc/systemd/network/50-qemu-default.link

[Match]

Path=pci-0000:00:03.0

Virtualization=kvm

[Link]

Name=lan0

/etc/systemd/network/50-hardware-default.link

[Match]

Path=pci-0000:03:00.0

Virtualization=no

[Link]

Name=lan0

- Then edit /etc/network/interfaces to refer to lan0

Spiffy new terminal emulators seem to come with their own terminfo

definitions. Venerable hosts that I ssh into tend not to know about

those. kitty comes with a thing to transfer that definition, but it

breaks if the remote host is running tcsh (don't ask). Similarly the

one liner for alacritty on the arch wiki seems to assume the remote

shell is bash. Forthwith, a dumb shell script that works to send the

terminfo of the current terminal emulator to the remote host.

EDIT: Jakub Wilk worked out this can be replaced with the oneliner

infocmp | ssh $host tic -x -

#!/bin/sh

if [ "$#" -ne 1 ]; then

printf "usage: sendterminfo host\n"

exit 1

fi

host="$1"

filename=$(mktemp terminfoXXXXXX)

cleanup () {

rm "$filename"

}

trap cleanup EXIT

infocmp > "$filename"

remotefile=$(ssh "$host" mktemp)

scp -q "$filename" "$host:$remotefile"

ssh "$host" "tic -x \"$remotefile\""

ssh "$host" rm "$remotefile"

Unfortunately schroot does not maintain CPU affinity 1. This means in

particular that parallel builds have the tendency to take over an

entire slurm managed server, which is kind of rude. I haven't had

time to automate this yet, but the following demonstrates a simple

workaround for interactive building.

╭─ simplex:~

╰─% schroot --preserve-environment -r -c polymake

(unstable-amd64-sbuild)bremner@simplex:~$ echo $SLURM_CPU_BIND_LIST

0x55555555555555555555

(unstable-amd64-sbuild)bremner@simplex:~$ grep Cpus /proc/self/status

Cpus_allowed: ffff,ffffffff,ffffffff

Cpus_allowed_list: 0-79

(unstable-amd64-sbuild)bremner@simplex:~$ taskset $SLURM_CPU_BIND_LIST bash

(unstable-amd64-sbuild)bremner@simplex:~$ grep Cpus /proc/self/status

Cpus_allowed: 5555,55555555,55555555

Cpus_allowed_list: 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54,56,58,60,62,64,66,68,70,72,74,76,78
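To see why that mask yields every second CPU, it helps to decode it by hand. The snippet below is a small Python sketch that does only that (it does not talk to slurm or taskset); `cpus_from_mask` is a hypothetical helper name.

```python
def cpus_from_mask(mask_hex: str) -> list[int]:
    """Return the CPU numbers whose bits are set in a hexadecimal affinity mask."""
    mask = int(mask_hex, 16)  # int() accepts the optional 0x prefix in base 16
    # Bit i of the mask corresponds to CPU i.
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]


# The value of SLURM_CPU_BIND_LIST from the session above.
cpus = cpus_from_mask("0x55555555555555555555")
print(cpus)
```

Each hex digit 5 is binary 0101, so bits 0 and 2 of every group of four are set, which matches the even-numbered list reported in Cpus_allowed_list.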

Next steps

In principle the schroot configuration parameter can be used to run

taskset before every command. In practice it's a bit fiddly because

you need a shell script shim (because of the environment variable) and

you need to e.g. goof around with bind mounts to make sure that your

script is available in the chroot. And then there's combining with

ccache and eatmydata...

Background

So apparently there's this pandemic thing, which means I'm teaching "Alternate Delivery" courses now. These are just like online courses, except possibly more synchronous, definitely less polished, and the tuition money doesn't go to the College of Extended Learning. I figure I'll need to manage and share videos, and our learning management system, in the immortal words of Marie Kondo, does not bring me joy. This has caused me to revisit the problem of sharing large files in an ikiwiki based site (like the one you are reading).

My goto solution for large file management is

git-annex. The last time I looked

at this (a decade ago or so?), I was blocked by git-annex using

symlinks and ikiwiki ignoring them for security related reasons.

Since then two things changed which made things relatively easy.

- I started using the rsync_command ikiwiki option to deploy my site.

- git-annex went through several design iterations for allowing non-symlink access to large files.

TL;DR

In my ikiwiki config

# attempt to hardlink source files? (optimisation for large files)

hardlink => 1,

In my ikiwiki git repo

$ git annex init

$ git annex add foo.jpg

$ git commit -m'add big photo'

$ git annex adjust --unlock # look ikiwiki, no symlinks

$ ikiwiki --setup ~/.config/ikiwiki/client # rebuild my local copy, for review

$ ikiwiki --setup /home/bremner/.config/ikiwiki/rsync.setup --refresh # deploy

You can see the result at photo

I have lately been using org-mode literate programming to generate

example code and beamer slides from the same source. I hit a wall

trying to re-use functions in multiple files, so I came up with the

following hack. Thanks 'ngz' on #emacs and Charles Berry on the

org-mode list for suggestions and discussion.

(defun db-extract-tangle-includes ()

(goto-char (point-min))

(let ((case-fold-search t)

(retval nil))

(while (re-search-forward "^#[+]TANGLE_INCLUDE:" nil t)

(let ((element (org-element-at-point)))

(when (eq (org-element-type element) 'keyword)

(push (org-element-property :value element) retval))))

retval))

(defun db-ob-tangle-hook ()

(let ((includes (db-extract-tangle-includes)))

(mapc #'org-babel-lob-ingest includes)))

(add-hook 'org-babel-pre-tangle-hook #'db-ob-tangle-hook t)

Use involves something like the following in your org-file.

#+SETUPFILE: presentation-settings.org

#+SETUPFILE: tangle-settings.org

#+TANGLE_INCLUDE: lecture21.org

#+TITLE: GC V: Mark & Sweep with free list
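For readers who do not speak Emacs Lisp, the extraction step amounts to a case-insensitive scan for keyword lines. Here is a rough Python equivalent, offered only as an illustration: it uses a plain regular expression instead of the org element parser, and unlike the `push`-based Lisp version it returns the values in document order. The names are invented for the sketch.

```python
import re

# Match "#+TANGLE_INCLUDE: value" at the start of a line, ignoring case,
# and capture the value without surrounding blanks.
TANGLE_INCLUDE = re.compile(
    r"^#\+TANGLE_INCLUDE:[ \t]*(.*?)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def extract_tangle_includes(text: str) -> list[str]:
    """Return the values of all #+TANGLE_INCLUDE keywords in TEXT."""
    return TANGLE_INCLUDE.findall(text)


header = """#+SETUPFILE: presentation-settings.org
#+SETUPFILE: tangle-settings.org
#+TANGLE_INCLUDE: lecture21.org
#+TITLE: GC V: Mark & Sweep with free list
"""
includes = extract_tangle_includes(header)
print(includes)
```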

For batch export, I run this from make.

What?

I previously posted about my extremely quick-and-dirty buildinfo database using buildinfo-sqlite. This year at DebConf, I re-implemented this using a PostgreSQL backend, and added some new features.

There is already buildinfo and buildinfos. I was informed I need to think up a name that clearly distinguishes from those two. Thus I give you builtin-pho.

There's a README for how to set up a local database. You'll need 12GB of disk space for the buildinfo files and another 4GB for the database (pro tip: you might want to change the location of your PostgreSQL data_directory, depending on how roomy your /var is)

Demo 1: find things built against old / buggy Build-Depends

select distinct p.source,p.version,d.version, b.path

from

binary_packages p, builds b, depends d

where

p.suite='sid' and b.source=p.source and

b.arch_all and p.arch = 'all'

and p.version = b.version

and d.id=b.id and d.depend='dh-elpa'

and d.version < debversion '1.16'

Demo 2: find packages in sid without buildinfo files

select distinct p.source,p.version

from

binary_packages p

where

p.suite='sid'

except

select p.source,p.version

from binary_packages p, builds b

where

b.source=p.source

and p.version=b.version

and ( (b.arch_all and p.arch='all') or

(b.arch_amd64 and p.arch='amd64') )
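The `except` in Demo 2 is a set difference: everything in sid, minus everything that has a matching build. A toy run makes that concrete. The sketch below uses Python's bundled sqlite3 rather than PostgreSQL, creates only the columns the query touches, and fills them with made-up rows, so it illustrates the query shape rather than the real builtin-pho schema.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
create table binary_packages (source text, version text, suite text, arch text);
create table builds (source text, version text, arch_all integer, arch_amd64 integer);
-- hypothetical data: baz is an amd64 package with only an arch-all build recorded
insert into binary_packages values
  ('foo', '1.0', 'sid', 'all'),
  ('bar', '2.0', 'sid', 'amd64'),
  ('baz', '3.0', 'sid', 'amd64'),
  ('old', '0.1', 'stable', 'all');
insert into builds values
  ('foo', '1.0', 1, 0),
  ('bar', '2.0', 0, 1),
  ('baz', '3.0', 1, 0);
""")

# Same structure as Demo 2: packages in sid, except those with a matching build.
missing = db.execute("""
select distinct p.source, p.version
  from binary_packages p
 where p.suite = 'sid'
except
select p.source, p.version
  from binary_packages p, builds b
 where b.source = p.source
   and p.version = b.version
   and ((b.arch_all and p.arch = 'all') or
        (b.arch_amd64 and p.arch = 'amd64'))
""").fetchall()
print(missing)
```

Only `baz` survives the difference, since its recorded build does not cover the architecture of the package in sid.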

Disclaimer

Work in progress by an SQL newbie.

1 Background

Apparently motivated by recent phishing attacks against @unb.ca

addresses, UNB's Integrated Technology Services unit (ITS) recently

started adding banners to the body of email messages. Despite

(cough) several requests, they have been unable and/or unwilling to

let people opt out of this. Recently ITS has reduced the size of

banner; this does not change the substance of what is discussed here.

In this blog post I'll try to document some of the reasons this

reduces the utility of my UNB email account.

2 What do I know about email?

I have been using email since 1985 1. I have administered my own UNIX-like systems since

the mid 1990s. I am a Debian Developer 2.

Debian is a mid-sized organization (there are more Debian Developers

than UNB faculty members) that functions mainly via email (including

discussions and a bug tracker). I maintain a mail user agent

(informally, an email client) called notmuch 3. I administer my own (non-UNB) email

server. I have spent many hours reading RFCs 4.

In summary, my perspective might be different than an enterprise email

administrator, but I do know something about the nuts and bolts of how

email works.

3 What's wrong with a helpful message?

3.1 It's a banner ad.

I don't browse the web without an ad-blocker and I don't watch TV with advertising in it. Apparently the main source of advertising in my life is a service provided by my employer. Some readers will probably dispute my description of a warning label inserted by an email provider as "advertising". Note that it is information inserted by a third party to promote their own (well intentioned) agenda, and inserted in an intentionally attention grabbing way. Advertisements from charities are still advertisements. Preventing phishing attacks is important, but so are an almost countless number of priorities of other units of the University. For better or worse those units are not so far able to insert messages into my email. As a thought experiment, imagine inserting a banner into every PDF file stored on UNB servers reminding people of the fiscal year end.

3.2 It makes us look unprofessional.

Because the banner is contained in the body of email messages, it almost inevitably ends up in replies. This lets funding agencies, industrial partners, and potential graduate students know that we consider them as potentially hostile entities. Suggesting that people should edit their replies is not really an acceptable answer, since it suggests that it is acceptable to download the work of maintaining the previous level of functionality onto each user of the system.

3.3 It doesn't help me

I have an archive of 61270 email messages received since 2003. Of

these 26215 claim to be from a unb.ca address 5. So historically

about 42% of the mail to arrive at my UNB mailbox is internal 6. This means that warnings will occur

in the majority of messages I receive. I think the onus is on the

proposer to show that a warning that occurs in the large majority of

messages will have any useful effect.

3.4 It disrupts my collaboration with open-source projects

Part of my job is to collaborate with various open source projects. A

prominent example is Eclipse OMR 7,

the technological driver for a collaboration with IBM that has brought

millions of dollars of graduate student funding to UNB. Git is now

the dominant version control system for open source projects, and one

popular way of using git is via git-send-email 8

Adding a banner breaks the delivery of patches by this method. In a previous experiment I did about a month ago, it "only" caused the banner to end up in the git commit message. Those of you familiar with software development will know that this is roughly the equivalent of walking out of the bathroom with toilet paper stuck to your shoe. You'd rather avoid it, but it's not fatal. The current implementation breaks things completely by quoted-printable re-encoding the message. In particular '=' gets transformed to '=3D' like the following

-+ gunichar *decoded=g_utf8_to_ucs4_fast (utf8_str, -1, NULL);

-+ const gunichar *p = decoded;

++ gunichar *decoded=3Dg_utf8_to_ucs4_fast (utf8_str, -1, NULL);

I'm not currently sure if this is a bug in git or some kind of failure in the re-encoding. It would likely require an investment of several hours of time to localize that.
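The `=3D` itself is ordinary quoted-printable behaviour: `=` is the escape character, so a literal `=` has to be written as `=3D`. A short Python sketch using the standard `quopri` module shows the transformation and that decoding undoes it. It says nothing about where in the git or mail pipeline the decoding step goes missing.

```python
import quopri

# One of the patch lines quoted above, as raw bytes.
line = b"gunichar *decoded=g_utf8_to_ucs4_fast (utf8_str, -1, NULL);"

# Quoted-printable escapes the literal '=' as '=3D'.
encoded = quopri.encodestring(line)
print(encoded)

# A consumer that decodes the transfer encoding gets the original bytes back.
decoded = quopri.decodestring(encoded)
print(decoded == line)
```

A patch applies cleanly only if whatever consumes the message performs that decoding before handing the body to git.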

3.5 It interferes with the use of cryptography.

Unlike many people, I don't generally read my email on a phone. This

means that I don't rely on the previews that are apparently disrupted

by the presence of a warning banner. On the other hand I do send and

receive OpenPGP signed and encrypted messages. The effects of the

banner on both signed and encrypted messages is similar, so I'll stick

to discussing signed messages here. There are two main ways of signing

a message. The older method, still unfortunately required for some

situations is called "inline PGP". The signed region is re-encoded,

which causes gpg to issue a warning about a buggy MTA 9, namely gpg: quoted printable character in armor -

probably a buggy MTA has been used. This is not exactly confidence

inspiring. The more robust and modern standard is PGP/MIME. Here the

insertion of a banner does not provoke warnings from the cryptography

software, but it does make it much harder to read the message (before

and after screenshots are given below). Perhaps more importantly it

changes the message from one which is entirely signed or encrypted 10, to

one which is partially signed or encrypted. Such messages were

instrumental in the EFAIL exploit 11 and will

probably soon be rejected by modern email clients.

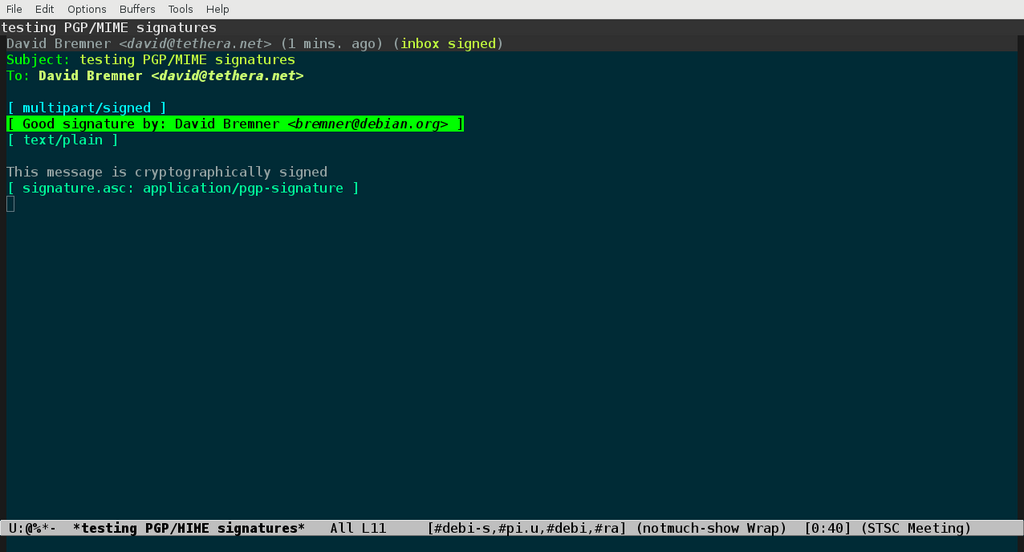

Figure 1: Intended view of PGP/MIME signed message

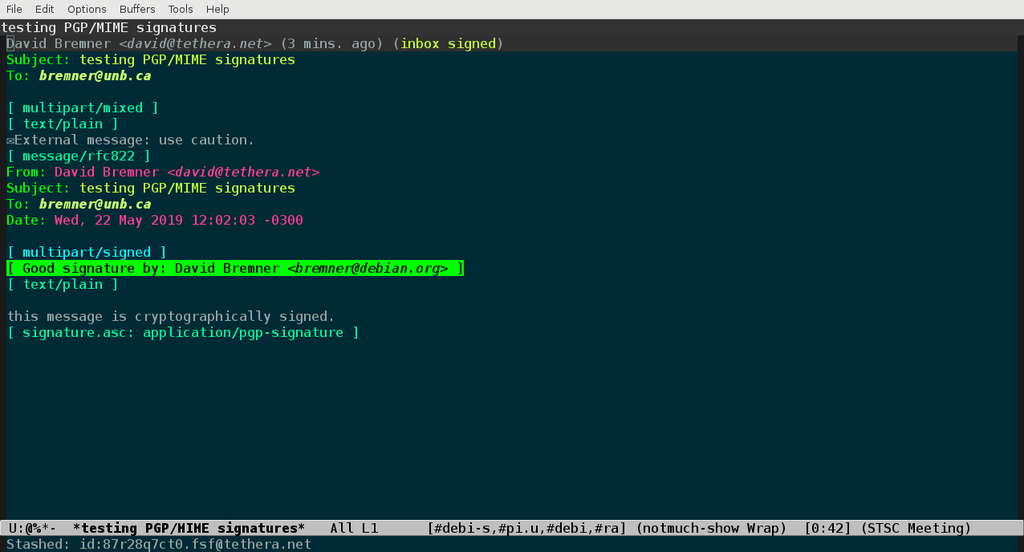

Figure 2: View with added banner

Footnotes:

[1] On Multics, when I was a high school student

[4] IETF Requests for Comments, which define most of the standards used by email systems.

[5] possibly overcounting some spam as UNB originating email

[6] In case it's not obvious dear reader, communicating with the world outside UNB is part of my job.

[8] Some important projects function exclusively that way. See https://git-send-email.io/ for more information.

[9] Mail Transfer Agent

Created: 2019-05-22 Wed 17:04

Emacs

2018-07-23

- NMUed cdargs

- NMUed silversearcher-ag-el

- Uploaded the partially unbundled emacs-goodies-el to Debian unstable

- packaged and uploaded graphviz-dot-mode

2018-07-24

- packaged and uploaded boxquote-el

- uploaded apache-mode-el

- Closed bugs in graphviz-dot-mode that were fixed by the new version.

- filed lintian bug about empty source package fields

2018-07-25

- packaged and uploaded emacs-session

- worked on sponsoring tabbar-el

2018-07-25

- uploaded dh-make-elpa

Notmuch

2018-07-2[23]

Wrote patch series to fix bug noticed by seanw while (seanw was) working on a package inspired by policy workflow.

2018-07-25

- Finished reviewing a patch series from dkg about protected headers.

2018-07-26

Helped seanw find the right config option for his bug report

Reviewed change proposal from aminb, suggested some issues to watch out for.

2018-07-27

- Add test for threading issue.

Nullmailer

2018-07-25

- uploaded nullmailer backport

2018-07-26

- add "envelopefilter" feature to remotes in nullmailer-ssh

Perl

2018-07-23

- Tried to figure out what documented BibTeX syntax is.

- Looked at BibTeX source.

- Ran away.

2018-07-24

- Forwarded #704527 to https://rt.cpan.org/Ticket/Display.html?id=125914

2018-07-25

- Uploaded libemail-abstract-perl to fix Vcs-* urls

- Updated debhelper compat and Standards-Version for libemail-thread-perl

- Uploaded libemail-thread-perl

2018-07-27

- fixed RC bug #904727 (blocking for perl transition)

Policy and procedures

2018-07-22

- seconded #459427

2018-07-23

- seconded #813471

- seconded #628515

2018-07-25

- read and discussed draft of salvaging policy with Tobi

2018-07-26

- Discussed policy bug about short form License and License-Grant

2018-07-27

- worked with Tobi on salvaging proposal

Introduction

Debian is currently collecting buildinfo files, but they are not very conveniently searchable. Eventually Chris Lamb's buildinfo.debian.net may solve this problem, but in the meantime, I decided to see how practical indexing the full set of buildinfo files is with sqlite.

Hack

First you need a copy of the buildinfo files. This is currently about 2.6G, and unfortunately you need to be a debian developer to fetch it.

$ rsync -avz mirror.ftp-master.debian.org:/srv/ftp-master.debian.org/buildinfo .

Indexing takes about 15 minutes on my 5 year old machine (with an SSD). If you index all dependencies, you get a database of about 4G, probably because of my natural genius for database design. Restricting to debhelper and dh-elpa, it's about 17M.

$ python3 index.py

You need at least python3-debian installed

Now you can do queries like

$ sqlite3 depends.sqlite "select * from depends where depend='dh-elpa' and depend_version<='0106'"

where 0106 is some adhoc normalization of 1.6
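The normalization is not spelled out here. One way to get 0106 from 1.6 is to zero-pad each dot-separated component to two digits, so that plain string comparison in sqlite orders versions sensibly. The Python sketch below assumes exactly that. `normalize` is a hypothetical helper, and whatever index.py really does may differ (this version ignores epochs, Debian revisions, and non-numeric parts).

```python
def normalize(version: str, width: int = 2) -> str:
    """Zero-pad each dotted component of VERSION and concatenate them."""
    return "".join(part.zfill(width) for part in version.split("."))


print(normalize("1.6"))    # the example from the query above
print(normalize("1.16"))

# Raw strings sort 1.16 before 1.6; the padded forms sort the other way round.
print("1.16" < "1.6", normalize("1.16") < normalize("1.6"))
```

The fragility mentioned in the conclusions is easy to see from this: a component with more digits than the padding width, or any letters, breaks the ordering.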

Conclusions

The version number hackery is pretty fragile, but good enough for my current purposes. A more serious limitation is that I don't currently have a nice (and you see how generous my definition of nice is) way of limiting to builds currently available e.g. in Debian unstable.

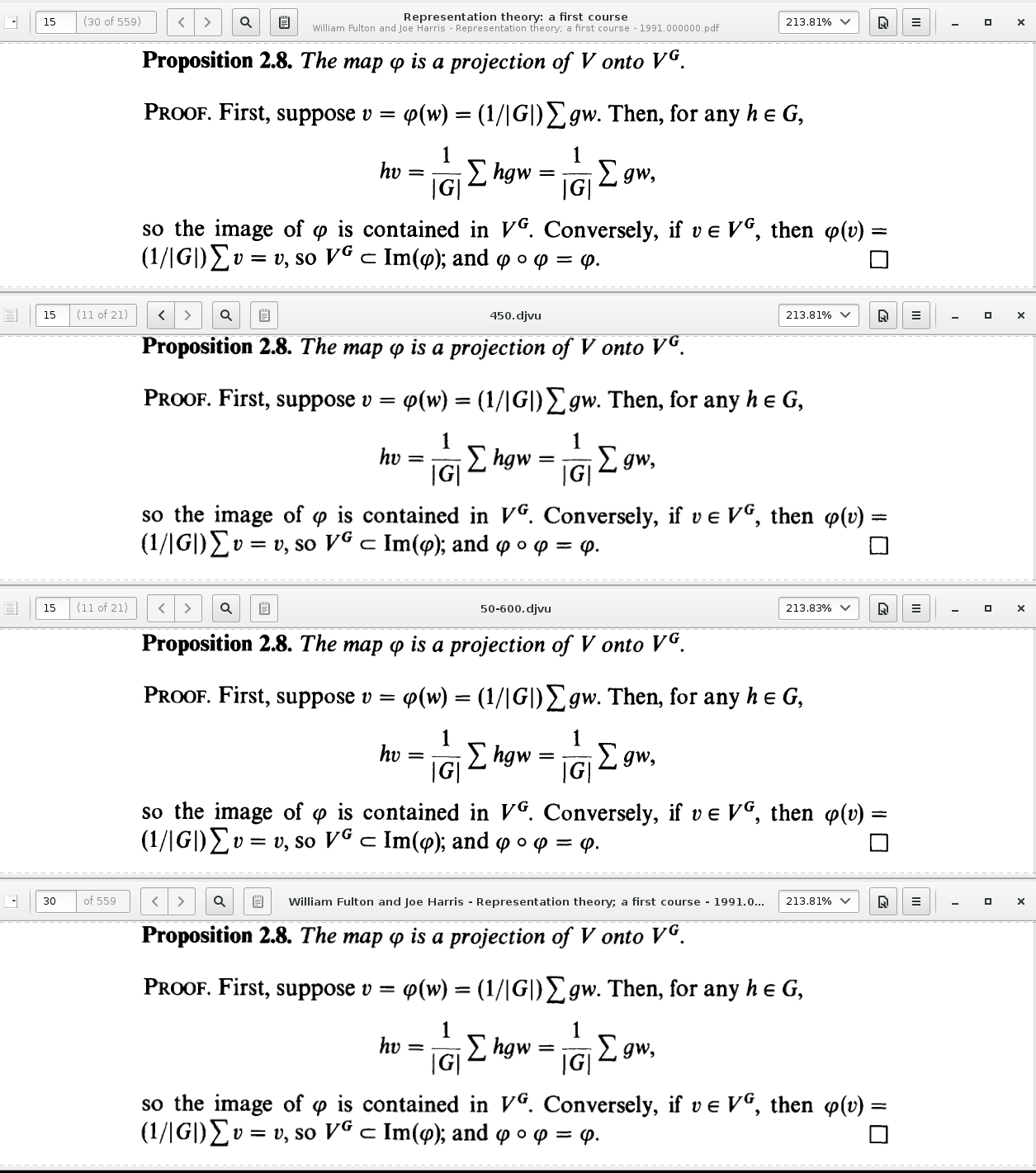

Today I was wondering about converting a pdf made from scan of a book

into djvu, hopefully to reduce the size, without too much loss of

quality. My initial experiments with

pdf2djvu were a bit

discouraging, so I invested some time building

gsdjvu in order to be able

to run djvudigital.

Watching the messages from djvudigital I realized that the reason it

was achieving so much better compression was that it was using black

and white for the foreground layer by default. I also figured out that

the default 300dpi looks crappy since my source document is apparently

600dpi.

I then went back and compared djvudigital to pdf2djvu a bit more

carefully. My not-very-scientific conclusions:

- monochrome at higher resolution is better than coloured foreground

- higher resolution and (a little) lossy beats lower resolution

- at the same resolution, djvudigital gives nicer output, but at the same bit rate, comparable results are achievable with pdf2djvu.

Perhaps most compellingly, the output from pdf2djvu has sensible

metadata and is searchable in evince. Even with the --words option,

the output from djvudigital is not. This is possibly related to the

error messages like

Can't build /Identity.Unicode /CIDDecoding resource. See gs_ciddc.ps .

It could well be my fault, because building gsdjvu involved guessing at corrections for several errors.

- comparing GS_VERSION to 900 doesn't work well, when GS_VERSION is a 5 digit number. GS_REVISION seems to be what's wanted there.

- extra declaration of struct timeval deleted

- -lz added to command to build mkromfs

Some of these issues have to do with building software from 2009 (the

instructions suggest building with ghostscript 8.64) in a modern

toolchain; others I'm not sure. There was an upload of gsdjvu in

February of 2015, somewhat to my surprise. AT&T has more or less

crippled the project by licensing it under the CPL, which means

binaries are not distributable, hence motivation to fix all the rough

edges is minimal.

| Version | kilobytes per page | position in figure |

|---|---|---|

| Original PDF | 80.9 | top |

| pdf2djvu --dpi=450 | 92.0 | not shown |

| pdf2djvu --monochrome --dpi=450 | 27.5 | second from top |

| pdf2djvu --monochrome --dpi=600 --loss-level=50 | 21.3 | second from bottom |

| djvudigital --dpi=450 | 29.4 | bottom |

After a mildly ridiculous amount of effort I made a bootable-usb key.

I then layered a bash script on top of a perl script on top of gpg. What could possibly go wrong?

#!/bin/bash

infile=$1

# collect the key id of every public key in the input file into an array
keys=( $(gpg --with-colons "$infile" | sed -n 's/^pub//p' | cut -f5 -d: ) )

gpg --homedir "$HOME/.caff/gnupghome" --import "$infile"

# pass each key id to caff as a separate argument
caff -R -m no "${keys[@]}"

today=$(date +"%Y-%m-%d")

output="$(pwd)/keys-$today.tar"

for key in "${keys[@]}"; do

(cd "$HOME/.caff/keys/" && tar rvf "$output" "$today/$key".mail*)

done

The idea is that keys are exported to files on a networked host, the files are processed on an offline host, and the resulting tarball of mail messages sneakernetted back to the connected host.

Umm. Somehow I thought this would be easier than learning about live-build. Probably I was wrong. There are probably many better tutorials on the web.

Two useful observations: zeroing the key can eliminate mysterious grub errors, and systemd-nspawn is pretty handy. One thing that should have been obvious, but wasn't to me is that it's easier to install grub onto a device outside of any container.

Find device

$ dmesg

Count sectors

# fdisk -l /dev/sdx

Assume that every command after here is dangerous.

Zero it out. This is overkill for a fresh key, but fixed a problem with reusing a stick that had a previous live distro installed on it.

# dd if=/dev/zero of=/dev/sdx bs=1048576 count=$count

Where $count is calculated by dividing the sector count by 2048.
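The factor of 2048 comes from the block size: with 512-byte sectors, one 1048576-byte dd block covers 2048 sectors. A tiny Python sketch of the arithmetic follows. The 512-byte sector size is an assumption (fdisk -l reports the real value), and the sector count is a made-up example.

```python
SECTOR_BYTES = 512      # assumed logical sector size; check the fdisk -l output
BLOCK_BYTES = 1048576   # the bs= value passed to dd (1 MiB)


def dd_count(sectors: int) -> int:
    """Number of whole dd blocks needed to cover SECTORS sectors."""
    return sectors * SECTOR_BYTES // BLOCK_BYTES


example_sectors = 4096000  # hypothetical sector count for illustration
count = dd_count(example_sectors)
print(count)
```

The division rounds down, so if the sector count is not a multiple of 2048 the last partial megabyte of the key is left untouched.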

Partition the key. There are lots of options. I eventually used parted

# parted

(parted) mklabel msdos

(parted) mkpart primary ext2 1 -1

(parted) set 1 boot on

(parted) quit

Make a file system

# mkfs.ext2 /dev/sdx1

# mount /dev/sdx1 /mnt

Install the base system

# debootstrap --variant=minbase jessie /mnt http://httpredir.debian.org/debian/

Install grub (no chroot needed)

# grub-install --boot-directory /mnt/boot /dev/sdx1

Set a root password

# chroot /mnt

# passwd root

# exit

Set up fstab

# blkid -p /dev/sdx1 | cut -f2 -d' ' > /mnt/etc/fstab

Now edit to fix syntax, tell ext2, etc...

Now switch to systemd-nspawn, to avoid boring bind mounting, etc..

# systemd-nspawn -b -D /mnt

login to the container, install linux-base, linux-image-amd64, grub-pc

EDIT: fixed block size of dd, based on suggestion of tg. EDIT2: fixed count to match block size

I've been a mostly happy Thinkpad owner for almost 15 years. My first Thinkpad was a 570, followed by an X40, an X61s, and an X220. There might have been one more in there, my archives only go back a decade. Although it's lately gotten harder to buy Thinkpads at UNB as Dell gets better contracts with our purchasing people, I've persevered, mainly because I'm used to the Trackpoint, and I like the availability of hardware service manuals. Overall I've been pleased with the engineering of the X series.

Over the last few days I learned about the installation of the superfish malware on new Lenovo systems, and Lenovo's completely inadequate response to the revelation. I don't use Windows, so this malware would not have directly affected me (unless I had the misfortune to use this system to download installation media for some GNU/Linux distribution). Nonetheless, how can I trust the firmware installed by a company that seems to value its users' security and privacy so little?

Unless Lenovo can show some sign of understanding the gravity of this mistake, and undertake not to repeat it, then I'm afraid you will be joining Sony on my list of vendors I used to consider buying from. Sure, it's only a gross income loss of $500 a year or so, if you assume I'm alone in this reaction. I don't think I'm alone in being disgusted and angered by this incident.

(Debian) packaging and Git.

The big picture is as follows. In my view, the most natural way to

work on a packaging project in version control [1] is to have an

upstream branch which either tracks upstream Git/Hg/Svn, or imports of

tarballs (or some combination thereof), and a Debian branch where both

modifications to upstream source and commits to stuff in ./debian are

added [2]. Deviations from this are mainly motivated by a desire to

export source packages, a version control neutral interchange format

that still preserves the distinction between upstream source and

distro modifications. Of course, if you're happy with the distro

modifications as one big diff, then you can stop reading now: run gitpkg

$debian_branch $upstream_branch and you're done. The other easy case

is if your changes don't touch upstream; then 3.0 (quilt) packages

work nicely with ./debian in a separate tarball.

So the tension is between my preferred integration style, and making

source packages with changes to upstream source organized in some

nice way, preferably in logical patches like, uh, commits in a

version control system. At some point we may be able to use some form of

version control repo as a source package, but the issues with that are

for another blog post. At the moment then we are stuck with

trying to bridge the gap between a git repository and a 3.0 (quilt)

source package. If you don't know the details of Debian packaging,

just imagine a patch series like you would generate with git

format-patch or apply with (surprise) quilt.

From Git to Quilt.

The most obvious (and the most common) way to bridge the gap between

git and quilt is to export patches manually (or using a helper like

gbp-pq) and commit them to the packaging repository. This has the

advantage of not forcing anyone to use git or specialized helpers to

collaborate on the package. On the other hand it's quite far from the

vision of using git (or your favourite VCS) to do the integration that

I started with.

The next level of sophistication is to maintain a branch of

upstream-modifying commits. Roughly speaking, this is the approach

taken by git-dpm, by gitpkg, and with some additional friction

from manually importing and exporting the patches, by gbp-pq. There

are some issues with rebasing a branch of patches, mainly it seems to

rely on one person at a time working on the patch branch, and it

forces the use of specialized tools or workflows. Nonetheless, both

git-dpm and gitpkg support this mode of working reasonably well [3].

Lately I've been working on exporting patches from (an immutable) git

history. My initial experiments with marking commits with git notes

more or less worked [4]. I put this on the back-burner for two

reasons, first sharing git notes is still not very well supported by

git itself [5], and second Gitpkg maintainer Ron Lee convinced me to

automagically pick out what patches to export. Ron's motivation (as I

understand it) is to have tools which work on any git repository

without extra metadata in the form of notes.

Linearizing History on the fly.

After a few iterations, I arrived at the following specification.

The user supplies two refs upstream and head. upstream should be suitable for export as a .orig.tar.gz file [6], and it should be an ancestor of head.

At source package build time, we want to construct a series of patches that

1. Is guaranteed to apply to upstream

2. Produces the same work tree as head, outside ./debian

3. Does not touch ./debian

4. As much as possible, matches the git history from upstream to head.

Condition (4) suggests we want something roughly like git

format-patch upstream..head, removing those patches which are

only about Debian packaging. Because of (3), we have to be a bit

careful about commits that touch upstream and ./debian. We also

want to avoid outputting patches that have been applied (or worse

partially applied) upstream. git patch-id can help identify

cherry-picked patches, but not partial application.

Eventually I arrived at the following strategy.

Use git-filter-branch to construct a copy of the history upstream..head with ./debian (and for technical reasons .pc) excised.

Filter these commits to remove e.g. those that are present exactly upstream, or those that introduce no changes, or changes unrepresentable in a patch.

Try to revert the remaining commits, in reverse order. The idea here is twofold. First, a patch that occurs twice in history because of merging will only revert the most recent one, allowing earlier copies to be skipped. Second, the state of the temporary branch after all successful reverts represents the difference from upstream not accounted for by any patch.

Generate a "fixup patch" accounting for any remaining differences, to be applied before any of the "nice" patches.

Cherry-pick each "nice" patch on top of the fixup patch, to ensure we have a linear history that can be exported to quilt. If any of these cherry-picks fail, abort the export.

Yep, it seems over-complicated to me too.
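To make the first two steps a little more concrete, here is a toy model in Python. It is not the git-debcherry code: commits are plain (id, paths) pairs invented for the example, and only the "excise ./debian and .pc" and "drop commits that introduce no changes" parts are modelled. The reverts, the fixup patch and the cherry-picks need a real repository.

```python
EXCLUDED_PREFIXES = ("debian/", ".pc/")


def strip_packaging(paths):
    """Forget every path under ./debian or .pc (roughly step 1)."""
    return [p for p in paths if not p.startswith(EXCLUDED_PREFIXES)]


def candidate_patches(commits):
    """Keep only commits that still change something (part of step 2).

    commits is a list of (commit_id, [paths]) pairs, oldest first.
    """
    kept = []
    for commit_id, paths in commits:
        remaining = strip_packaging(paths)
        if remaining:
            kept.append((commit_id, remaining))
    return kept


# Hypothetical history between upstream and head
history = [
    ("a1", ["debian/control", "debian/rules"]),   # packaging only: dropped
    ("b2", ["src/main.c", "debian/changelog"]),   # mixed: upstream part kept
    ("c3", ["Makefile"]),                         # upstream only: kept
]
print(candidate_patches(history))
```

Note how the mixed commit survives with only its upstream half, which is what condition (3) asks for.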

TL;DR: Show me the code.

You can clone my current version from

git://pivot.cs.unb.ca/gitpkg.git

This provides a script "git-debcherry" which does the history linearization discussed above. In order to test out how/if this works in your repository, you could run

git-debcherry --stat $UPSTREAM

For actual use, you probably want to use something like

git-debcherry -o debian/patches

There is a hook in hooks/debcherry-deb-export-hook that does this at

source package export time.

I'm aware this is not that fast; it does several expensive operations. On the other hand, you know what Don Knuth says about premature optimization, so I'm more interested in reports of when it does and doesn't work. In addition to crashing, generating a multi-megabyte "fixup patch" probably counts as failure.

Notes

[1] This first part doesn't seem too Debian or git specific to me, but I don't know much concrete about other packaging workflows or other version control systems.

[2] Another variation is to have a patched upstream branch and merge that into the Debian packaging branch. The trade-off here is that you can simplify the patch export process a bit, but the repo needs to have taken this disciplined approach from the beginning.

[3] git-dpm merges the patched upstream into the Debian branch. This makes the history a bit messier, but seems to be more robust. I've been thinking about trying this out (semi-manually) for gitpkg.

[4] See e.g. exporting. Although I did not then know the many surprising and horrible things people do in packaging histories, so it probably didn't work as well as I thought it did.

[5] It's doable, but one ends up spending a bunch of lines of code on duplicating basic git functionality; e.g. there is no real support for tags of notes.

[6] Since as far as I know quilt has no way of deleting files except to list the content, this means in particular exporting upstream should yield a DFSG Free source tree.

In April of 2012 I bought a ColorHug colorimeter. I got a bit discouraged when the first thing I realized was that one of my monitors needed replacing, and put the colorhug in a drawer until today.

With quite a lot of help and encouragement from Pascal de Bruijn, I finally got it going. Pascal has written an informative blog post on color management. That's a good place to look for background. This is more of a "write down the commands so I don't forget" sort of blog post, but it might help somebody else trying to calibrate their monitor using argyll on the command line. I'm not running gnome, so using gnome color manager turns out to be a bit of a hassle.

I run Debian Wheezy on this machine, and I'll mention the packages I used, even though I didn't install most of them today.

Find the masking tape, and tear off a long enough strip to hold the ColorHug on the monitor. This is probably the real reason I gave up last time; it takes about 45 minutes to run the calibration, and I lack the attention span/upper-arm-strength to hold the sensor up for that long. Apparently new ColorHugs are shipping with some elastic.

Update the firmware on the colorhug. This is a gui-wizard kind of thing.

% apt-get install colorhug-client

% colorhug-flash

Set the monitor to factory defaults. On this ASUS PA238QR, that is brightness 100, contrast 80, R=G=B=100. I adjusted the brightness down to about 70; 100 is kind of eye-burning IMHO.

Figure out which display is which; I have two monitors.

% dispwin -\?

Look under "-d n"

Do the calibration. This is really verbatim from Pascal, except I added the ENABLE_COLORHUG=true and -d 2 bits.

% apt-get install argyll

% ENABLE_COLORHUG=true dispcal -v -d 2 -m -q m -y l -t 6500 -g 2.2 test

% targen -v -d 3 -G -f 128 test

% ENABLE_COLORHUG=true dispread -v -d 2 -y l -k test.cal test

% colprof -v -A "make" -M "model" -D "make model desc" -C "copyright" -q m -a G test

Load the profile

% dispwin -d 2 -I test.icc

It seems this only loads the x property _ICC_PROFILE_1 instead of _ICC_PROFILE; whether this works for a particular application seems to be not 100% guaranteed. It seems ok for darktable and gimp.

It's spring, and young(ish?) hackers' minds turn to OpenCL. What is the state of things? I haven't the faintest idea, but I thought I'd try to share what I find out. So far, just some links. Details to be filled in later, particularly if you, dear reader, tell them to me.

Specification

LLVM based front ends

Mesa backend

Rumours/hopes of something working in mesa 8.1?

- r600g is merged into master as of this writing.

- clover

Other projects

- SNU This project seems to be only for Cell/ARM/DSP at the moment. Although they make you register to download, it looks like it is LGPL.

I've been experimenting with a new packaging tool/workflow based on marking certain commits on my integration branch for export as quilt patches. In this post I'll walk through converting the package nauty to this workflow.

Add a control file for the gitpkg export hook, and enable the hook: (the package is already 3.0 (quilt))

% echo ':debpatch: upstream..master' > debian/source/git-patches

% git add debian/source/git-patches && git commit -m'add control file for gitpkg quilt export'

% git config gitpkg.deb-export-hook /usr/share/gitpkg/hooks/quilt-patches-deb-export-hook

This says that all commits reachable from master but not from upstream should be checked for possible export as quilt patches.

This package was previously maintained in the "recommend topgit style" with the patches checked in on a separate branch, so grab a copy.

% git archive --prefix=nauty/ build | (cd /tmp ; tar xvf -)

More conventional git-buildpackage style packaging would not need this step.

Import the patches. If everything is perfect, you can use git quiltimport, but I have several patches not listed in "series", and quiltimport ignores series, so I have to do things by hand.

% git am /tmp/nauty/debian/patches/feature/shlib.diff

Mark my imported patch for export.

% git debpatch +export HEAD

git debpatch list outputs the following

afb2c20 feature/shlib

Export: true

makefile.in | 241 +++++++++++++++++++++++++++++++++-------------------------

1 files changed, 136 insertions(+), 105 deletions(-)

The first line is the subject line of the patch, followed by any notes from debpatch (in this case, just 'Export: true'), followed by a diffstat. If more patches were marked, this would be repeated for each one.

In this case I notice the subject line is kind of cryptic and decide to amend.

git commit --amend

git debpatch list still shows the same thing, which highlights a fundamental aspect of git notes: they attach to commits. And I just made a new commit, so

git debpatch -export afb2c20

git debpatch +export HEAD

Now git debpatch list looks ok, so we try git debpatch export as a dry run. In debian/patches we have

0001-makefile.in-Support-building-a-shared-library-and-st.patch

series

That looks good. Now we are not going to commit this, since one of our overall goals is to avoid committing patches. To clean up the export,

rm -rf debian/patches

gitpkg master exports a source package, and because I enabled the appropriate hook, I have the following

% tar tvf ../deb-packages/nauty/nauty_2.4r2-1.debian.tar.gz | grep debian/patches

drwxr-xr-x 0/0 0 2012-03-13 23:08 debian/patches/

-rw-r--r-- 0/0 143 2012-03-13 23:08 debian/patches/series

-rw-r--r-- 0/0 14399 2012-03-13 23:08 debian/patches/0001-makefile.in-Support-building-a-shared-library-and-st.patch

Note that these patches are exported straight from git.

I'm done for now so

git push

git debpatch push

The second command is needed to push the debpatch notes metadata to the origin. There are corresponding fetch, merge, and pull commands.
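The point about notes attaching to commits (and therefore being left behind by an amend) can be illustrated with a toy model. This is not how git or debpatch store anything; the hashing scheme, the names and the sample commit data below are invented purely to show why the note has to be moved by hand.

```python
import hashlib


def commit_id(tree, message, parent):
    """Toy commit id: any change to the message yields a different id."""
    data = f"tree {tree}\nparent {parent}\n\n{message}\n".encode()
    return hashlib.sha1(data).hexdigest()


notes = {}   # notes are looked up by commit id, nothing else

old = commit_id("t1", "feature/shlib", "p0")
notes[old] = {"Export": "true"}            # like: git debpatch +export HEAD

# Amending the message creates a new commit with a new id ...
new = commit_id("t1", "makefile.in: Support building a shared library", "p0")
note_found_after_amend = notes.get(new)    # ... which has no note attached

# ... so the mark has to be moved, like -export on the old id, +export on HEAD
notes[new] = notes.pop(old)
print(note_found_after_amend, notes[new])
```

The same reasoning applies to rebasing: every rewritten commit gets a new id, so any notes stay on the old ones.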

More info

Example package: bibutils In this package, I was already maintaining the upstream patches merged into my master branch; I retroactively added the quilt export.

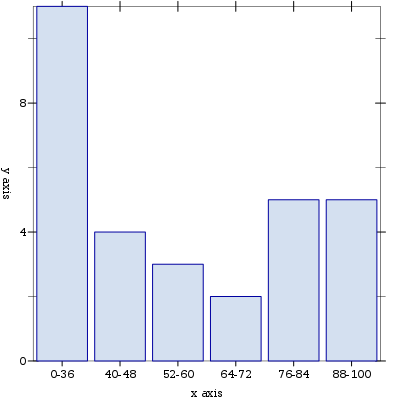

I have been in the habit of using R to make e.g. histograms of test scores in my courses. The main problem is that I don't really need (or am too ignorant to know that I need) the vast statistical powers of R, and I use it rarely enough that its always a bit of a struggle to get the plot I want.

racket is a programming language in the scheme family, distinguished from some of its more spartan cousins by its "batteries included" attitude.

I recently stumbled upon the PLoT graph (information visualization kind, not networks) plotting module and was pretty impressed with the Snazzy 3D Pictures.

So this time I decided try using PLoT for my chores. It worked out pretty well; of course I am not very ambitious. Compared to using R, I had to do a bit more work in data preparation, but it was faster to write the Racket than to get R to do the work for me (again, probably a matter of relative familiarity).

#lang racket/base

(require racket/list)

(require plot)

(define marks (build-list 30 (lambda (n) (random 25))))

(define out-of 25)

(define breaks '((0 9) (10 12) (13 15) (16 18) (19 21) (22 25)))

(define (per-cent n)

(ceiling (* 100 (/ n out-of))))

(define (label l)

(format "~a-~a" (per-cent (first l)) (per-cent (second l))))

(define (buckets l)

(for/list ([b breaks])

(vector (label b)

(count (lambda (x) (and

(<= x (second b))

(>= x (first b))))

l))))

(plot

(list

(discrete-histogram

(buckets marks)))

#:out-file "racket-hist.png")
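For readers who don't speak Racket, the data preparation step (everything except the actual plotting) can be sketched in Python. The names mirror the Racket definitions above; the sample marks in the example are made up.

```python
OUT_OF = 25
BREAKS = [(0, 9), (10, 12), (13, 15), (16, 18), (19, 21), (22, 25)]


def per_cent(n, out_of=OUT_OF):
    """Percentage rounded up, using integer arithmetic only."""
    return -(-100 * n // out_of)


def label(lo, hi):
    return f"{per_cent(lo)}-{per_cent(hi)}"


def buckets(marks, breaks=BREAKS):
    """One (label, count) pair per range; both ends of a range are inclusive."""
    return [(label(lo, hi), sum(1 for m in marks if lo <= m <= hi))
            for lo, hi in breaks]


# Hypothetical marks out of 25
print(buckets([0, 9, 10, 25, 25]))
```

The resulting list of (label, count) pairs is the same shape as the vectors handed to discrete-histogram.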

It seems kind of unfair, given the name, but duplicity really doesn't like to be run in parallel. This means that some naive admin (not me of course, but uh, this guy I know ;) ) who writes a crontab

@daily duplicity incr $ARGS $SRC $DEST

@weekly duplicity full $ARGS $SRC $DEST

is in for a nasty surprise when both fire at the same time. In particular one of them will terminate with the not very helpful

AttributeError: BackupChain instance has no attribute 'archive_dir'

After some preliminary reading of mailing list archives, I decided to

delete ~/.cache/duplicity on the client and try again. This was

not a good move.

- It didn't fix the problem

- Resyncing from the server required decrypting some information, which required access to the gpg private key.

Now for me, one of the main motivations for using duplicity was that I could encrypt to a key without having the private key accessible. Luckily the following crazy hack works.

- On a host where the gpg private key is accessible, delete ~/.cache/duplicity, and perform some arbitrary duplicity operation. I did

duplicity clean $DEST

UPDATE: for this hack to work, at least with the s3 backend, you need to specify the same arguments. In particular omitting --s3-use-new-style will cause mysterious failures. Also, --use-agent may help.

- Now rsync the ~/.cache/duplicity directory to the backup client.

Now at first you will be depressed, because the problem isn't fixed yet. What you need to do is go onto the backup server (in my case Amazon s3) and delete one of the backups (in my case, the incremental one). Of course, if you are the kind of reader who skips to the end, probably just doing this will fix the problem and you can avoid the hijinks.

And, uh, some kind of locking would probably be a good plan... For now I just stagger the cron jobs.
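One possible shape for that locking, sketched in Python with an advisory flock on a lock file. This is an assumption about how one might do it, not something duplicity provides; the lock path and function name are hypothetical, and it assumes a Unix-like system where the fcntl module is available.

```python
import fcntl
import os
import tempfile


def try_lock(path):
    """Return an open file holding an exclusive lock, or None if it is taken."""
    handle = open(path, "w")
    try:
        fcntl.flock(handle, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        handle.close()
        return None
    return handle


# Hypothetical lock file shared by the daily and weekly jobs
lock_path = os.path.join(tempfile.mkdtemp(), "backup.lock")

first = try_lock(lock_path)    # the job that fires first gets the lock
second = try_lock(lock_path)   # the other job should see None and exit
print(first is not None, second is None)

first.close()                  # closing the file releases the lock
```

A wrapper script would run duplicity only when try_lock succeeds. The flock(1) command from util-linux offers much the same thing directly from a crontab line.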

As of version 0.17, gitpkg ships with a hook called quilt-patches-deb-export-hook. This can be used to export patches from git at the time of creating the source package.

This is controlled by a file debian/source/git-patches.

Each line contains a range suitable for passing to git-format-patch(1).

The variables UPSTREAM_VERSION and DEB_VERSION are replaced with

values taken from debian/changelog. Note that $UPSTREAM_VERSION is

the first part of $DEB_VERSION

An example is

upstream/$UPSTREAM_VERSION..patches/$DEB_VERSION

upstream/$UPSTREAM_VERSION..embedded-libs/$DEB_VERSION

This tells gitpkg to export the given two ranges of commits to debian/patches while generating the source package. Each commit becomes a patch in debian/patches, with names generated from the commit messages. In this example, we get 5 patches from the two ranges.

0001-expand-pattern-in-no-java-rule.patch

0002-fix-dd_free_global_constants.patch

0003-Backported-patch-for-CPlusPlus-name-mangling-guesser.patch

0004-Use-system-copy-of-nauty-in-apps-graph.patch

0005-Comment-out-jreality-installation.patch

Thanks to the wonders of 3.0 (quilt) packages, these are applied

when the source package is unpacked.
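To make the variable substitution and the patch naming a bit more concrete, here is a rough Python sketch. It is not gitpkg's implementation: the version split follows the general Debian [epoch:]upstream[-revision] layout, the file names only approximate what git format-patch produces, and the 52 character cut-off and the sample subjects are assumptions.

```python
import re


def upstream_version(deb_version):
    """Drop an epoch and the Debian revision, leaving the upstream part."""
    without_epoch = deb_version.split(":", 1)[-1]
    if "-" in without_epoch:
        return without_epoch.rsplit("-", 1)[0]
    return without_epoch


def expand_range(template, deb_version):
    """Fill in both variables in one line of debian/source/git-patches."""
    return (template
            .replace("$UPSTREAM_VERSION", upstream_version(deb_version))
            .replace("$DEB_VERSION", deb_version))


def patch_name(number, subject, max_subject=52):
    """Approximate a format-patch style file name from a commit subject."""
    slug = re.sub(r"[^A-Za-z0-9._]+", "-", subject).strip("-.")
    return f"{number:04d}-{slug[:max_subject]}.patch"


print(expand_range("upstream/$UPSTREAM_VERSION..patches/$DEB_VERSION", "2.4r2-1"))
print(patch_name(1, "expand pattern in no-java rule"))
```

Since the expanded names are used as git refs, the tags upstream/... and patches/... have to exist in the repository for every uploaded version.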

Caveats.

Current lintian complains bitterly about debian/source/git-patches. This should be fixed with the next upload.

It's a bit dangerous if you check out such a package from git, don't read any of the documentation, and build with debuild or something similar, since you won't get the patches applied. There is a proposed check that catches most such booboos. You could also cause the build to fail if the same error is detected; this is a matter of personal taste I guess.

I use a lot of code in my lectures, in many different programming languages.

I use highlight to generate HTML (via ikiwiki) for web pages.

For class presentations, I mostly use the beamer LaTeX class.

In order to simplify generating overlays, I wrote a perl script hl-beamer.pl to preprocess source code. An htmlification of the documentation/man-page follows.

NAME

hl-beamer - Preprocessor for highlight to generate beamer overlays.

SYNOPSIS

hl-beamer -c // InstructiveExample.java | highlight -S java -O latex > figure1.tex

DESCRIPTION

hl-beamer looks for single line comments (with syntax specified by -c). These comments can start with @ followed by some codes to specify beamer overlays or sections (just chunks of text which can be selectively included).

OPTIONS

-c commentstring Start of single line comments

-k section1,section2 List of sections to keep (see @( below).

-s number strip number spaces from the front of every line (tabs are first converted to spaces using Text::Tabs::expand)

-S strip all directive comments.
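The effect of -s can be sketched in a few lines of Python. This is a guess at the behaviour from the description above, not a translation of hl-beamer.pl: it assumes 8-column tab stops, and that a line with less indentation than requested simply loses what it has.

```python
def strip_indent(line, number, tabsize=8):
    """Expand tabs, then remove up to `number` leading spaces."""
    expanded = line.expandtabs(tabsize)
    leading = len(expanded) - len(expanded.lstrip(" "))
    return expanded[min(number, leading):]


print(repr(strip_indent("\tint value;", 4)))      # tab becomes 8 spaces, 4 remain
print(repr(strip_indent("  return cursor;", 4)))  # only 2 spaces to remove
```

Expanding tabs first is what makes the count meaningful; otherwise a single tab would count as one character.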

CODES

@( section named section. Can be nested. Pass -k section to include in output. The same name can (usefully) be re-used. Sections omit and comment are omitted by default.

@) close most recent section.

@< [overlaytype] [overlayspec] define a beamer overlay. overlaytype defaults to visibleenv if not specified. overlayspec defaults to +- if not specified.

@> close most recent overlay

EXAMPLE

Example input follows. I would probably process this with

hl-beamer -s 4 -k encoderInner

Sample Input

// @( omit

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.io.Serializable;

import java.util.Scanner;

// @)

// @( encoderInner

private int findRun(int inRow, int startCol){

// @<

int value=bits[inRow][startCol];

int cursor=startCol;

// @>

// @<

while(cursor<columns &&

bits[inRow][cursor] == value)

//@<

cursor++;

//@>

// @>

// @<

return cursor-1;

// @>

}

// @)

BUGS AND LIMITATIONS

Currently overlaytype and section must consist of upper and lower case letters and/or underscores. This is basically pure sloth on the part of the author.

Tabs are always expanded to spaces.

I recently decided to try maintaining a Debian package (bibutils) without committing any patches to Git. One of the disadvantages of this approach is that the patches for upstream are not nicely sorted out in ./debian/patches. I decided to write a little tool to sort out which commits should be sent to upstream. I'm not too happy about the length of it, or the name "git-classify", but I'm posting in case someone has some suggestions. Or maybe somebody finds this useful.

#!/usr/bin/perl

use strict;

my $upstreamonly=0;

if ($ARGV[0] eq "-u"){

$upstreamonly=1;

shift (@ARGV);

}

open(GIT,"git log -z --format=\"%n%x00%H\" --name-only @ARGV|");

# throw away blank line at the beginning.

$_=<GIT>;

my $sha="";

LINE: while(<GIT>){

chomp();

next LINE if (m/^\s*$/);

if (m/^\x0([0-9a-fA-F]+)/){

$sha=$1;

} else {

my $debian=0;

my $upstream=0;

foreach my $word ( split("\x00",$_) ) {

if ($word=~m@^debian/@) {

$debian++;

} elsif (length($word)>0) {

$upstream++;

}

}

if (!$upstreamonly){

print "$sha\t";

print "MIXED" if ($upstream>0 && $debian>0);

print "upstream" if ($upstream>0 && $debian==0);

print "debian" if ($upstream==0 && $debian>0);

print "\n";

} else {

print "$sha\n" if ($upstream>0 && $debian==0);

}

}

}

=pod

=head1 Name

git-classify - Classify commits as upstream, debian, or MIXED

=head1 Synopsis

=over

=item B<git classify> [I<-u>] [I<arguments for git-log>]

=back

=head1 Description

Classify a range of commits (specified as for git-log) as I<upstream>

(touching only files outside ./debian), I<debian> (touching files only

inside ./debian) or I<MIXED>. Presumably these last kind are to be

discouraged.

=head2 Options

=over

=item B<-u> output only the SHA1 hashes of upstream commits (as

defined above).

=back

=head1 Examples

Generate all likely patches to send upstream

git classify -u $SHA..HEAD | xargs -L1 git format-patch -1
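Stripped of the git log parsing, the classification rule in the script is small. A Python rendering of just that rule, for illustration; the function name and the sample paths are made up.

```python
def classify(paths):
    """Label a commit by the files it touches, like the print block above."""
    debian = sum(1 for p in paths if p.startswith("debian/"))
    upstream = sum(1 for p in paths if p and not p.startswith("debian/"))
    if upstream and debian:
        return "MIXED"
    if upstream:
        return "upstream"
    if debian:
        return "debian"
    return ""   # no files listed: the script prints only the hash


print(classify(["debian/rules", "src/bibcore.c"]))   # MIXED
print(classify(["src/bibcore.c"]))                    # upstream
print(classify(["debian/changelog"]))                 # debian
```

The -u mode corresponds to keeping only the commits for which this returns "upstream".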

Before I discovered you could just point your browser at

http://search.cpan.org/meta/Dist-Name-0.007/META.json to automagically

convert META.yml to META.json, I wrote a script to do it.

Anyway, it goes with my "I hate the cloud" prejudices :).

use CPAN::Meta;

use CPAN::Meta::Converter;

use Data::Dumper;

my $meta = CPAN::Meta->load_file("META.yml");

my $cmc = CPAN::Meta::Converter->new($meta);

my $new=CPAN::Meta->new($cmc->convert(version=>"2"));

$new->save("META.json");

It turns out that pdfedit is pretty good at extracting text from pdf files. Here is a script I wrote to do that in batch mode.

#!/bin/sh

# Print the text from a pdf document on stdout

# Copyright: © 2006-2010 PDFedit team <http://sourceforge.net/projects/pdfedit>

# Copyright: © 2010, David Bremner <david@tethera.net>

# Licensed under version 2 or later of the GNU GPL

set -e

if [ $# -lt 1 ]; then

echo usage: $0 file [pageSep]

exit 1

fi

/usr/bin/pdfedit -console -eval '

function onConsoleStart() {

var inName = takeParameter();

var pageSep = takeParameter();

var doc = loadPdf(inName,false);

pages=doc.getPageCount();

for (i=1;i<=pages;i++) {

pg=doc.getPage(i);

text=pg.getText();

print(text);

print("\n");

print(pageSep);

}

}

' "$1" ${2:+"$2"}

Yeah, I wish #!/usr/bin/pdfedit worked too. Thanks to Aaron M Ucko for pointing out that

-eval could replace the use of a temporary file.

Oh, and pdfedit will be even better when the authors release a new version that fixes truncating wide text

Dear Julien;

After using notmuch for a while, I came to the conclusion that tags are mostly irrelevant. What is a game changer for me is fast global search. And yes, I changed from using dovecot search, so I mean much faster than that. Actually I remember from the Human Computer Interface course that I took in the early Neolithic era that speed of response has been measured as a key factor in interfaces, so maybe it isn't just me.

Of course there are tradeoffs, some of which you mention.

David

What is it?

I was a bit daunted by the number of mails from people signing my gpg keys at debconf, so I wrote a script to mass process them. The workflow, for those of you using notmuch is as follows:

$ notmuch show --format=mbox tag:keysign > sigs.mbox

$ ffac sigs.mbox

where previously I have tagged keysigning emails as "keysign" if I want to import them. You also need to run gpg-agent, since I was too lazy/scared to deal with passphrases.

This will import them into a keyring in ~/.ffac; uploading is still

manual using something like

$ gpg --homedir=$HOME/.ffac --send-keys $keyid

UPDATE Before you upload all of those shiny signatures, you might

want to use the included script fetch-sig-keys to add the

corresponding keys to the temporary keyring in ~/.ffac.

After

$ fetch-sig-keys $keyid

then

$ gpg --homedir ~/.ffac --list-sigs $keyid

should have a UID associated with each signature.

How do I use it

At the moment this has been tested once or twice by one

person. More testing would be great, but be warned this is pre-release

software until you can install it with apt-get.

Get the script from

$ git clone git://pivot.cs.unb.ca/git/ffac.git

Get a patched version of Mail::GnuPG that supports gpg-agent; hopefully this will make it upstream, but for now,

$ git clone git://pivot.cs.unb.ca/git/mail-gnupg.git

I have a patched version of the debian package that I could make available if there was interest.

Install the other dependencies.

# apt-get install libmime-parser-perl libemail-folder-perl

UPDATED

2011/07/29 libmail-gnupg-perl in Debian supports gpg-agent for some time now.

racket (previously known as plt-scheme) is an

interpreter/JIT-compiler/development environment with about 6 years of

subversion history in a converted git repo. Debian packaging has been

done in subversion, with only the contents of ./debian in version

control. I wanted to merge these into a single git repository.

The first step is to create a repo and fetch the relevant history.

TMPDIR=/var/tmp

export TMPDIR

ME=`readlink -f $0`

AUTHORS=`dirname $ME`/authors

mkdir racket && cd racket && git init

git remote add racket git://git.racket-lang.org/plt

git fetch --tags racket

git config merge.renameLimit 10000

git svn init --stdlayout svn://svn.debian.org/svn/pkg-plt-scheme/plt-scheme/

git svn fetch -A$AUTHORS

git branch debian

A couple points to note:

- At some point there were huge numbers of renames when the project renamed itself, hence the setting for merge.renameLimit

- Note the use of an authors file to make sure the author names and emails are reasonable in the imported history.

- git svn creates a branch master, which we will eventually forcibly overwrite; we stash that branch as debian for later use.

Now a couple complications arose about upstream's git repo.

Upstream releases separate source tarballs for unix, mac, and windows. Each of these is constructed by deleting a large number of files from version control, and occasionally some last minute fiddling with README files and so on.

The history of the release tags is not completely linear. For example,

rocinante:~/projects/racket (git-svn)-[master]-% git diff --shortstat v4.2.4 `git merge-base v4.2.4 v5.0`

48 files changed, 242 insertions(+), 393 deletions(-)

rocinante:~/projects/racket (git-svn)-[master]-% git diff --shortstat v4.2.1 `git merge-base v4.2.1 v4.2.4`

76 files changed, 642 insertions(+), 1485 deletions(-)

The combination made my straightforward attempt at constructing a history synced with release tarballs generate many conflicts. I ended up importing each tarball on a temporary branch, and the merges went smoother. Note also the use of "git merge -s recursive -X theirs" to resolve conflicts in favour of the new upstream version.

The repetitive bits of the merge are collected as shell functions.

import_tgz() {

if [ -f $1 ]; then

git clean -fxd;

git ls-files -z | xargs -0 rm -f;

tar --strip-components=1 -zxvf $1 ;

git add -A;

git commit -m'Importing '`basename $1`;

else

echo "missing tarball $1";

fi;

}

do_merge() {

version=$1

git checkout -b v$version-tarball v$version

import_tgz ../plt-scheme_$version.orig.tar.gz

git checkout upstream

git merge -s recursive -X theirs v$version-tarball

}

post_merge() {

version=$1

git tag -f upstream/$version

pristine-tar commit ../plt-scheme_$version.orig.tar.gz

git branch -d v$version-tarball

}

The entire merge script is here. A typical step looks like

do_merge 5.0

git rm collects/tests/stepper/automatic-tests.ss

git add `git status -s | egrep ^UA | cut -f2 -d' '`

git checkout v5.0-tarball doc/release-notes/teachpack/HISTORY.txt

git rm readme.txt

git add collects/tests/web-server/info.rkt

git commit -m'Resolve conflicts from new upstream version 5.0'

post_merge 5.0

Finally, we have the comparatively easy task of merging the upstream

and Debian branches. In one or two places git was confused by all of

the copying and renaming of files and I had to manually fix things up

with git rm.

cd racket || /bin/true

set -e

git checkout debian

git tag -f packaging/4.0.1-2 `git svn find-rev r98`

git tag -f packaging/4.2.1-1 `git svn find-rev r113`

git tag -f packaging/4.2.4-2 `git svn find-rev r126`

git branch -f master upstream/4.0.1

git checkout master

git merge packaging/4.0.1-2

git tag -f debian/4.0.1-2

git merge upstream/4.2.1

git merge packaging/4.2.1-1

git tag -f debian/4.2.1-1

git merge upstream/4.2.4

git merge packaging/4.2.4-2

git rm collects/tests/stxclass/more-tests.ss && git commit -m'fix false rename detection'

git tag -f debian/4.2.4-2

git merge -s recursive -X theirs upstream/5.0

git rm collects/tests/web-server/info.rkt

git commit -m 'Merge upstream 5.0'

I'm thinking about distributed issue tracking systems that play nice with git. I don't care about other version control systems anymore :). I also prefer command line interfaces, because as commentators on the blog have mentioned, I'm a Luddite (in the imprecise, slang sense).

So far I have found a few projects, and tried to guess how much of a going concern they are.

Git Specific

ticgit I don't know if this is github at its best or worst, but the original project seems dormant and there are several forks. According to the original author, this one is probably the best.

git-issues Originally a rewrite of ticgit in python, it now claims to be defunct.

VCS Agnostic

ditz Despite my not caring about other VCSs, ditz is VCS agnostic, just making files. Seems active.

cil takes a similar approach to ditz, is written in Perl rather than Ruby, and should release again any day now (hint, hint).

milli is a minimalist approach to the same theme.

Sortof VCS Agnostic

bugs everywhere is written in python. Works with Arch, Bazaar, Darcs, Git, and Mercurial. There seems to be some on-going development activity.

simple defects has Git and Darcs integration. It seems active. It's written by bestpractical people, so no surprise it is written in Perl.

Updated

- 2010-10-01 Note activity for bugs everywhere

- 2012-06-22 Note git-issues self description as defunct. Update link for cil.

I'm collecting information (or at least links) about functional programming languages on the JVM. I'm going to intentionally leave "functional programming language" undefined here, so that people can have fun debating :).

Functional Languages

Languages and Libraries with functional features

Projects and rumours.

There has been discussion about making jhc target the JVM. They both start with 'j', so that is hopeful.

Java itself may (soon? eventually?) support closures

You have a gitolite install on host $MASTER, and you want a mirror on $SLAVE. Here is one way to do that. $CLIENT is your workstation, that need not be the same as $MASTER or $SLAVE.

On $CLIENT, install gitolite on $SLAVE. It is ok to re-use your gitolite admin key here, but make sure you have both the public and the private key in .ssh, or confusion ensues. Note that when gitolite asks you to double check the "host gitolite" ssh stanza, you probably want to change hostname to $SLAVE, at least temporarily (if not, at least the checkout of the gitolite-admin repo will fail). You may want to copy .gitolite.rc from $MASTER when gitolite fires up an editor.

On $CLIENT, copy the "gitolite" stanza of .ssh/config to a new stanza called e.g. gitolite-slave, then fix the hostname of the gitolite stanza so it points to $MASTER again.

On $MASTER, as the gitolite user, make a passphraseless ssh key. You should probably call it something like 'mirror'.

Still on $MASTER, add a stanza like the following to $gitolite_user/.ssh/config

host gitolite-mirror

hostname $SLAVE

identityfile ~/.ssh/mirror

Run ssh gitolite-mirror at least once to test and set up any "known_hosts" file.

On $CLIENT, change directory to a checkout of gitolite-admin from $MASTER. Make sure it is up to date with respect to origin:

git pull

Edit .git/config (or, in very recent git, use git remote set-url --push --add) so that remote origin looks like

fetch = +refs/heads/*:refs/remotes/origin/*

url = gitolite:gitolite-admin

pushurl = gitolite:gitolite-admin

pushurl = gitolite-slave:gitolite-admin

Add a stanza

repo @all RW+ = mirror

to the bottom of your gitolite.conf. Add mirror.pub to keydir.
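The remote origin layout described earlier (one fetch URL, two push URLs) can also be produced from the command line instead of editing .git/config by hand. The following is only a sketch: it works in a throwaway repository created with mktemp so that nothing real is touched, and the remote names gitolite and gitolite-slave are the ssh stanzas assumed in the steps above.

```shell
#!/bin/sh
# Sketch: give "origin" two push URLs, in a scratch repository.
repo=$(mktemp -d)
git init -q "$repo"

# The fetch URL points at $MASTER (via the "gitolite" ssh stanza).
git -C "$repo" remote add origin gitolite:gitolite-admin

# Once any pushurl is set, git stops pushing to "url", so the master
# has to be listed explicitly alongside the mirror.
git -C "$repo" remote set-url --add --push origin gitolite:gitolite-admin
git -C "$repo" remote set-url --add --push origin gitolite-slave:gitolite-admin

# Show the resulting push URLs, one per line.
git -C "$repo" config --get-all remote.origin.pushurl
```

In a real checkout you would drop the mktemp and git init lines and run the two set-url commands directly.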

Now overwrite the gitolite-admin repo on $SLAVE

git push -f

Note that empty repos will be created on $SLAVE for every repo on $MASTER.

Add the following one-line post-update hook to any repos you want mirrored (see the gitolite documentation for how to automate this). You should not modify the post-update hook of the gitolite-admin repo.

git push --mirror gitolite-mirror:$GL_REPO.git

Create repos as per normal in the gitolite-admin/conf/gitolite.conf. If you have set the auto post-update hook installation, then each repo will be mirrored. You should only push to $MASTER; any changes pushed to $SLAVE will be overwritten.
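If you prefer a single hook file that is safe to install everywhere, the one-line hook above could be wrapped roughly as follows. This is a sketch, not something taken from the gitolite distribution: the helper names mirror_url and should_mirror are made up for illustration, and it assumes gitolite exports GL_REPO to hooks as in the one-liner.

```shell
#!/bin/sh
# Hypothetical post-update hook: mirror every repo except gitolite-admin.

# Build the push target from the repo name; "gitolite-mirror" is the
# ssh stanza defined on $MASTER.
mirror_url() {
    printf 'gitolite-mirror:%s.git\n' "$1"
}

# The admin repo is pushed to both hosts from $CLIENT, so skip it here.
should_mirror() {
    [ "$1" != "gitolite-admin" ]
}

# Only push when gitolite has told us which repo was updated.
if [ -n "${GL_REPO:-}" ] && should_mirror "$GL_REPO"; then
    git push --mirror "$(mirror_url "$GL_REPO")"
fi
```

Run outside gitolite (GL_REPO unset) the script does nothing, which makes it easy to check by hand before installing it.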

Recently I was asked how to read mps (old school linear programming input) files. I couldn't think of a completely off the shelf way to do it, so I wrote a simple C program to use the glpk library.

Of course in general you would want to do something other than print it out again.

So this is in some sense a nadir for shell scripting. 2 lines that do something out of 111. Mostly cargo-culted from cowpoke by ron, but much less fancy. rsbuild foo.dsc should do the trick.

#!/bin/sh

# Start a remote sbuild process via ssh. Based on cowpoke from devscripts.

# Copyright (c) 2007-9 Ron <ron@debian.org>

# Copyright (c) David Bremner 2009 <david@tethera.net>

#

# Distributed according to Version 2 or later of the GNU GPL.

BUILDD_HOST=sbuild-host

BUILDD_DIR=var/sbuild #relative to home directory

BUILDD_USER=""

DEBBUILDOPTS="DEB_BUILD_OPTIONS=\"parallel=3\""

BUILDD_ARCH="$(dpkg-architecture -qDEB_BUILD_ARCH 2>/dev/null)"

BUILDD_DIST="default"

usage()

{

cat 1>&2 <<EOF

rsbuild [options] package.dsc

Uploads a Debian source package to a remote host and builds it using sbuild.

The following options are supported:

--arch="arch" Specify the Debian architecture(s) to build for.

--dist="dist" Specify the Debian distribution(s) to build for.

--buildd="host" Specify the remote host to build on.

--buildd-user="name" Specify the remote user to build as.

The current default configuration is:

BUILDD_HOST = $BUILDD_HOST

BUILDD_USER = $BUILDD_USER

BUILDD_ARCH = $BUILDD_ARCH

BUILDD_DIST = $BUILDD_DIST

The expected remote paths are:

BUILDD_DIR = $BUILDD_DIR

sbuild must be configured on the build host. You must have ssh

access to the build host as BUILDD_USER if that is set, else as the

user executing rsbuild or a user specified in your ssh config for

'$BUILDD_HOST'. That user must be able to execute sbuild.

EOF

exit $1

}

PROGNAME="$(basename $0)"

version ()

{

echo \

"This is $PROGNAME, version 0.0.0

This code is copyright 2007-9 by Ron <ron@debian.org>, all rights reserved.

Copyright 2009 by David Bremner <david@tethera.net>, all rights reserved.

This program comes with ABSOLUTELY NO WARRANTY.

You are free to redistribute this code under the terms of the

GNU General Public License, version 2 or later"

exit 0

}

for arg; do

case "$arg" in

--arch=*)

BUILDD_ARCH="${arg#*=}"

;;

--dist=*)

BUILDD_DIST="${arg#*=}"

;;

--buildd=*)

BUILDD_HOST="${arg#*=}"

;;

--buildd-user=*)

BUILDD_USER="${arg#*=}"

;;

--dpkg-opts=*)

DEBBUILDOPTS="DEB_BUILD_OPTIONS=\"${arg#*=}\""

;;

*.dsc)

DSC="$arg"

;;

--help)

usage 0

;;

--version)

version

;;

*)

echo "ERROR: unrecognised option '$arg'"

usage 1

;;

esac

done

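# Prefix the host with "user@" only when BUILDD_USER is non-empty.

dcmd rsync --verbose --checksum "$DSC" "${BUILDD_USER:+$BUILDD_USER@}$BUILDD_HOST:$BUILDD_DIR"

ssh -t "${BUILDD_USER:+$BUILDD_USER@}$BUILDD_HOST" "cd $BUILDD_DIR && $DEBBUILDOPTS sbuild --arch=$BUILDD_ARCH --dist=$BUILDD_DIST $DSC"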
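Most of the script is option parsing, and all of it hangs on the ${arg#*=} expansion, which strips the shortest prefix ending in "=". A small sketch of that idiom in isolation (opt_value is a name invented here, not part of rsbuild):

```shell
#!/bin/sh
# Return the value part of a --name=value option: everything after
# the first "=".
opt_value() {
    printf '%s\n' "${1#*=}"
}

opt_value '--dist=unstable'
opt_value '--dpkg-opts=parallel=3'
```

Because the shortest matching prefix is removed, a value that itself contains "=" (as in the --dpkg-opts case) survives intact.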

I am currently making a shared library out of some existing C code, for eventual inclusion in Debian. Because the author wasn't thinking about things like ABIs and APIs, the code is not too careful about what symbols it exports, and I decided to clean up some of the more obviously private symbols exported.

I wrote the following simple script because I got tired of running grep by hand. If you run it with

grep-symbols symbolfile *.c

It will print the symbols sorted by how many times they occur in the other arguments.

#!/usr/bin/perl

use strict;

use File::Slurp;

my $symfile=shift(@ARGV);

# parenthesise the call so that "|| die" applies to open, not to the filename string
open(SYMBOLS, "<", $symfile) || die "$symfile: $!";

# "parse" the symbols file

my %count=();

# skip first line;

$_=<SYMBOLS>;

while(<SYMBOLS>){

chomp();

s/^\s*([^\@]+)\@.*$/$1/;

$count{$_}=0;

}

# check the rest of the command line arguments for matches against symbols. Omega(n^2), sigh.

foreach my $file (@ARGV){

my $string=read_file($file);

foreach my $sym (keys %count){

if ($string =~ m/\b$sym\b/){

$count{$sym}++;

}

}

}

print "Symbol\t Count\n";

foreach my $sym (sort {$count{$a} <=> $count{$b}} (keys %count)){

print "$sym\t$count{$sym}\n";

}

- Updated Thanks to Peter Pöschl for pointing out the file slurp should not be in the inner loop.
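For readers unfamiliar with the input, the "parse" step assumes the usual Debian symbols file layout: a header line naming the library, then one indented symbol@version line per symbol. The substitution keeps only the part before the "@". A sketch of the same transformation with sed, using a made-up symbol name (foo_init) and a helper name (symbol_name) chosen for illustration:

```shell
#!/bin/sh
# Strip leading whitespace and everything from "@" onwards, mirroring
# the Perl substitution s/^\s*([^\@]+)\@.*$/$1/.
symbol_name() {
    printf '%s\n' "$1" | sed -E 's/^[[:space:]]*([^@]+)@.*$/\1/'
}

symbol_name ' foo_init@Base 1.0'
```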

So, a few weeks ago I wanted to play some music. Amarok2 was only playing one track at a time. Hmm, rather than fight with it, maybe it is time to investigate alternatives. So here is my story. Mac-using friends will probably find this amusing.

minirok segfaults as soon as I try to do something #544230

bluemingo seems to only understand mp3's

exaile didn't play m4a (these are ripped with faac, so no DRM) files out of the box. A small amount of googling didn't explain it.

mpd looks cool, but I didn't really want to bother with that amount of setup right now.

Quod Libet also seems to have some configuration issues preventing it from playing m4a's

I hate the interface of Audacious

mocp looks cool, like mpd but easier to set up, but crashes trying to play an m4a file. This looks a lot like #530373

qmmp + xmonad = user interface fail.

juk also seems not to play (or catalog) my m4a's

In the end I went back and had a second look at mpd, and I'm pretty happy with it, just using the command line client mpc right now. I intend to investigate the mingus emacs client for mpd at some point.

An emerging theme is that m4a on Linux is a pain.

UPDATED It turns out that one problem was I needed gstreamer0.10-plugins-bad and gstreamer0.10-plugins-really-bad. The latter comes from debian-multimedia.org, and had a file conflict with the former in Debian unstable (bug #544667 apparently just fixed). Grabbing the version from testing made it work. This fixed at least rhythmbox, exaile and quodlibet. Thanks to Tim-Philipp Müller for the solution.

I guess the point I missed at first was that so many of the players use gstreamer as a back end, so what looked like many bugs/configuration-problems was really one. Presumably I'd have to go through a similar process to get phonon working for juk.

So I had this brainstorm that I could get sticky labels approximately the right size and paste current gpg key info to the back of business cards. I played with glabels for a bit, but we didn't get along. I decided to hack something up based on the gpg-key2ps script in the signing-party package. I'm not proud of the current state; it is hard-coded for one particular kind of labels I have on my desk, but it should be easy to polish if anyone thinks this is an idea worth pursuing. The output looks like this. Note that the boxes are just for debugging.